Project: Sentiment Prediction (Tweet)

- Fabien Cappelli

- May 27, 2025

- 6 min read

Project Background and Objective

The goal of this project is to build a functional prototype of an Artificial Intelligence capable of accurately predicting the sentiment (positive or negative) associated with English-language tweets.

In the absence of internal data, the project relies on a large open-source dataset of approximately 1.6 million tweets, each labeled with a binary sentiment (positive or negative).

The ultimate objective is to develop a high-performing model, accessible via a cloud-based API, and usable through an intuitive web interface.

MLOps Principles

The goal is also to implement a workflow aligned as closely as possible with the key principles of MLOps.

1. ML Lifecycle Automation

All stages of a machine learning model’s lifecycle — from data exploration to deployment, including training and monitoring — should be automated and orchestrated.

This includes data collection, preprocessing, training, testing, deployment, monitoring, and maintenance.

As we’ll see later, all of this is managed in our project using MLflow and GitHub.

2. Continuous Integration and Continuous Deployment (CI/CD)

MLOps adapts DevOps practices to machine learning by emphasizing continuous integration (automated testing, code and data validation) and continuous deployment (fast and reliable production rollout of models).

In this project, CI/CD is handled primarily through GitHub Actions, along with DockerHub and Azure.

3. Version Control

We aim to version not only the code, but also the data, models, and configurations to ensure reproducibility and proper tracking of all changes (experiments, deployed models, datasets used).

In our project, this is managed through MLflow and GitHub.

4. Monitoring and Traceability

Implementation of tools to monitor model performance in production (accuracy, data drift, concept drift).

Model traceability: being able to know at any time which model is deployed, with which data, and how it was trained.

We will handle alerts via Azure Insights, and ensure traceability via GitHub and MLflow.

5. Interdisciplinary Collaboration

Facilitating collaboration between data scientists, ML engineers, developers, and IT operations.

This involves using centralized platforms, shared pipelines, and common environment management.

6. Scalability and Reproducibility

Ensuring that ML pipelines are scalable (able to handle large volumes of data) and reproducible (producing the same results with the same inputs, with the ability to rerun experiments identically).

7. Security and Compliance

Ensuring the security of both data and models, as well as regulatory compliance — including access control, logging, and auditability.

Data Preparation and Exploration

The initial dataset included not only the tweet texts but also metadata such as the user ID and the publication date.

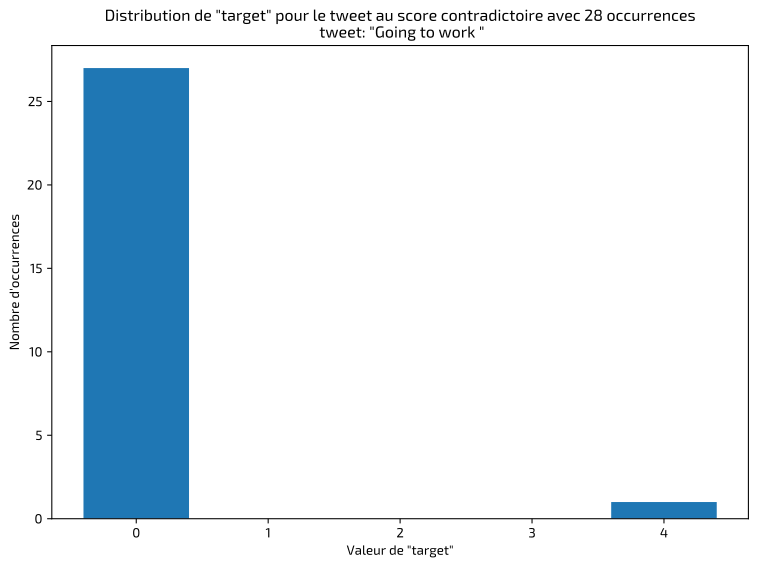

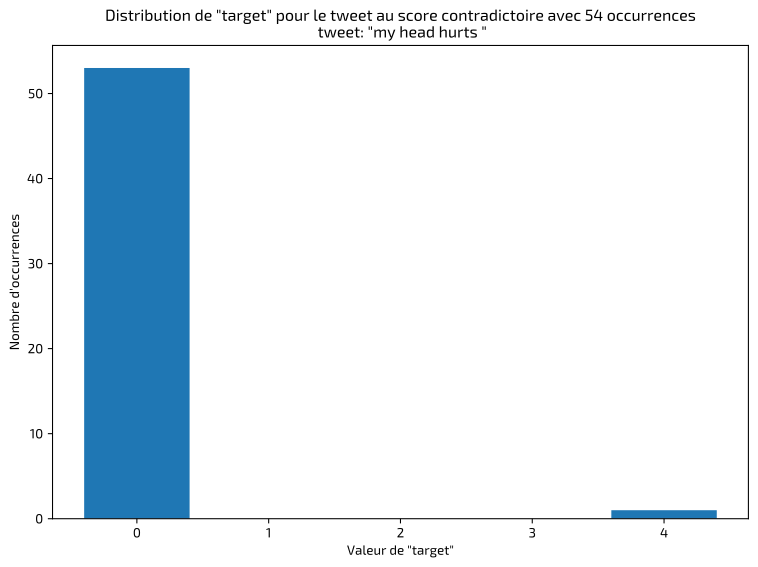

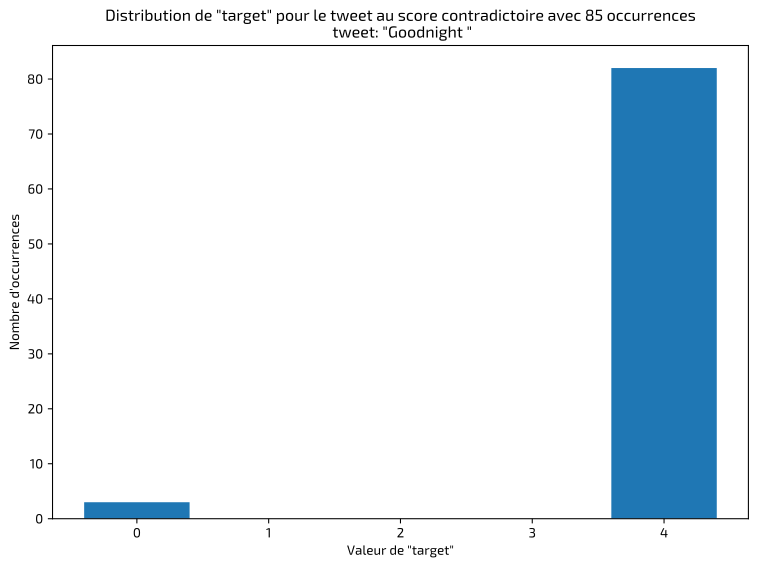

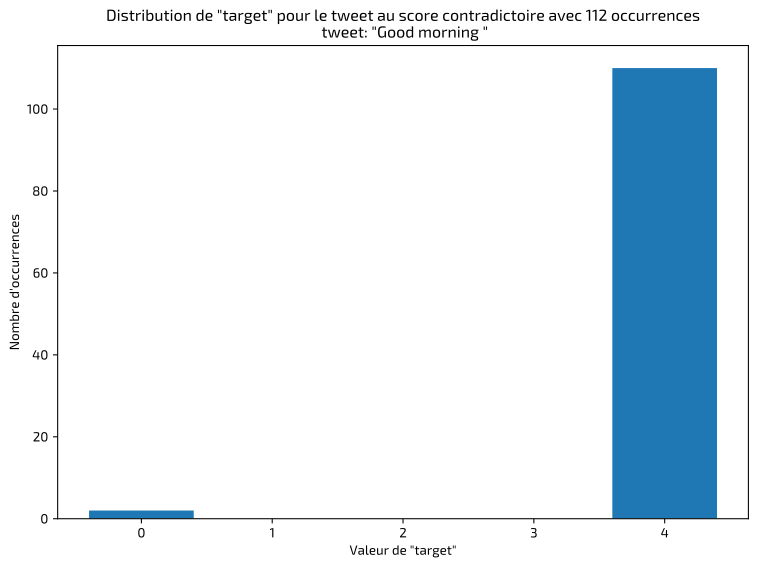

The first step in this type of project is to inspect and validate the data to identify any anomalies or labeling errors. I quickly detected identical tweets that were inconsistently labeled — here are a few examples:

In such cases, I kept the majority label. The final result shows a balanced dataset between positive and negative classes:

Modeling Approaches Explored

Several approaches were methodically explored to identify the most effective and appropriate solution for this specific task.

Hyperparameter Optimization

When training an artificial intelligence model, there are certain settings called hyperparameters. These are parameters external to the model itself — for example, batch size, learning rate, number of epochs, etc.

These hyperparameters are not learned by the model; they must be chosen before training, and their values can significantly impact final performance.

Grid search is a systematic method used to find the best hyperparameters.

Evaluation Criteria and Methods

The models’ performance was assessed using several rigorous metrics:

Accuracy: The percentage of correct predictions across the dataset. Reliable in this case thanks to the balanced data. This is the metric used to select the best hyperparameters.

F1-score: Balances the ability to identify all positive cases while minimizing false positives.

ROC AUC: Measures the model’s ability to correctly rank predictions by their estimated probability.

Inference time: A practical indicator of the model’s complexity and operational footprint.

Data Preprocessing and Informed Vocabulary Selection

Different preprocessing strategies were explored, ranging from simple cleaning (removal of URLs, hashtags, mentions) to more advanced techniques (lemmatization, tokenization using spaCy).Special attention was given to vocabulary selection, determining in a reasoned way how many words to include to effectively cover the corpus while limiting noise and unnecessary complexity.

Model Types

Classic Model (Logistic Regression and TF-IDF)

This traditional approach involves two main steps:TF-IDF vectorization of the tweets, which weighs the importance of words based on their frequency within documents and their rarity across the entire corpus, followed by logistic regression to classify tweets as positive or negative.

Hyperparameter search focused primarily on:

Minimum and maximum document frequency

N-gram size

Regularization parameter (C) to prevent overfitting

Convolutional Neural Network (CNN)

The CNN approach stands out for its ability to detect patterns in textual data.The model uses an embedding layer to transform words into vectors, followed by two convolutional layers (Conv1D) to capture characteristic patterns of expressions typically associated with positive or negative sentiment.

A global max pooling layer summarizes the detected patterns before a dense layer and a final output layer provide the prediction.

Use of Pre-trained Embeddings

While I initially allowed the network to learn its own embeddings, I also tested two pre-trained embeddings to leverage existing semantic richness:

GloVe Twitter: specifically designed to capture the linguistic features of social media.

GoogleNews Word2Vec: more general-purpose, effective with a broad vocabulary but less suited to the informal style typical of tweets.

Critical hyperparameters for this approach included:

Embedding dimension

Number of filters

Kernel size

Dropout rate

Learning rate and batch size

Recurrent Neural Network (RNN – Bidirectional LSTM)

The bidirectional LSTM architecture is particularly well-suited for contextual analysis of long sentences, as it takes into account both the preceding and following context of each word. Two bidirectional layers were used to extract and consolidate the contextual information needed for accurate classification.

Tested hyperparameters included:

Number of LSTM units, determining the model’s memory capacity

Learning rate

Batch size

Transformer Model (DistilBERT)

DistilBERT, a compact and optimized version of BERT, uses an attention mechanism to capture subtle meanings and long-range dependencies in text. This model, particularly effective with the informal language of social media, was specifically fine-tuned on the dataset used in this project.

The main hyperparameters selected for optimization were:

Learning rate

Batch size

Professional Use of MLflow via DagsHub

I used MLflow, through the DagsHub platform, to systematically track all experiments carried out:

Automatic logging of training parameters, metrics, and results

Quick and precise comparison of model performance across runs

Facilitated reproducibility and traceability of all experiments

Model Performance and Final Selection

Here is a comparison of the performance results from our tests:

DistilBERT was ultimately selected due to its superior performance, its ability to capture linguistic subtleties, and a model size that remains reasonable for deployment.

The second-best option would be the raw RNN — without any data preprocessing.

Technical Deployment and Automation via API

An API and UI were developed to ensure modularity and operational efficiency:

Backend with FastAPI: handles prediction requests and returns the predicted class along with associated probabilities.

Frontend with Streamlit: an interactive user interface, including an alert feature allowing users to report misclassifications.

Both components were containerized with Docker to ensure portability and consistent behavior across different environments.

Automation and Continuous Deployment

GitHub was used for source code management, while GitHub Actions automated unit testing and Docker image creation.These images were then stored on DockerHub and automatically deployed to Azure, ensuring a robust Continuous Integration and Deployment (CI/CD) pipeline.

Alert System

Azure Insights completes this architecture by providing alerts in the event of frequent incidents or repeated malfunctions reported through the frontend API.

In our example, if three tweets are flagged as misclassified within five minutes, an alert is sent via email and SMS to my inbox and phone.

Post-Deployment Monitoring

The recommended actions for post-deployment monitoring and production use are as follows:

In the event of a significant increase in alerts, a human review of the flagged data can be triggered to determine whether model retraining is necessary.A minimum threshold of 0.1% to 1% of the original dataset (i.e., between 1,600 and 16,000 tweets) is required to justify retraining.

(1365 words)

Comments